Who is Right?

22nd of October 2015

Listening to a colleague present his architecture for a Business Intelligence (BI) platform last week, I heard the words “Single Version of the Truth” come up quite a few times. This “Single Version of the Truth” has been the holy grail of data sciences (as Gartner has dubbed Big Data) for as long as I have known BI to exist. And it got me wondering what that actually means within a BI context.

Surely the data warehouses used for this BI approach are not considered the master of this data. I would expect that role to be filled by the operational data stores that supply the data being aggregated. Otherwise, whenever a discrepancy between the data warehouse and the source data store is detected, the data warehouse would be deemed correct, and the information in the operational data store would have to be corrected to resolve the discrepancy.
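To make that direction of correction concrete, here is a minimal sketch of a reconciliation in which the operational store wins. The function names, the dictionary layout and the sample order values are all made up for illustration; a real platform would compare far richer records.

```python
from typing import Dict, List, Optional, Tuple

# (key, value in the operational source, value in the warehouse or None if missing)
Discrepancy = Tuple[str, float, Optional[float]]


def find_discrepancies(
    source: Dict[str, float], warehouse: Dict[str, float]
) -> List[Discrepancy]:
    """List every source record that the warehouse lacks or holds with a different value."""
    return [
        (key, value, warehouse.get(key))
        for key, value in sorted(source.items())
        if warehouse.get(key) != value
    ]


def reconcile(source: Dict[str, float], warehouse: Dict[str, float]) -> Dict[str, float]:
    """Return a corrected copy of the warehouse; the operational source is treated as master."""
    corrected = dict(warehouse)  # leave the original warehouse snapshot untouched
    for key, source_value, _ in find_discrepancies(source, warehouse):
        corrected[key] = source_value
    return corrected


# Hypothetical sample data: order totals per order id.
orders_source = {"order-1": 100.0, "order-2": 250.0, "order-3": 75.0}
orders_warehouse = {"order-1": 100.0, "order-2": 205.0}

print(find_discrepancies(orders_source, orders_warehouse))
print(reconcile(orders_source, orders_warehouse))
```

Note that this sketch only walks the source records, so a record that exists solely in the warehouse is left alone. Whether such a record should be purged is a separate decision.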

Wikipedia gives the following definition: Single Version of the Truth is a technical concept describing the data warehousing ideal of having either a single centralised database, or at least a distributed synchronised database, which stores all of an organisation's data in a consistent and non-redundant form. This contrasts with the related concept of Single Source of Truth (SSOT), which refers to a data storage principle to always source a particular piece of information from one place.

Bill Inmon, one of the founders of the BI discipline, lists several benefits of a “Single Version of the Truth”, some of which are:

- there is a basis for reconciliation;

- there is always a starting point for new analyses;

- there is less redundant data; and

- there is integrity of data.

However, there are some issues with attempting to achieve such a lofty goal. In an era where there is an information flood of almost biblical proportions, reducing the truth to a single version is far from a trivial task. Most of these streams are “dirty” data residing in multiple, often incompatible, data sets. The effort to unify and clean up this data would be gargantuan, and its cost could easily outstrip its usefulness. The truth is also a moving target. Information does not simply stop happening, and all a warehouse can hope to attain is a snapshot of this truth. And finally, there is the act of trying to comprehend the entire elephant. Sometimes we need to slice and dice information in order to make it more manageable and understandable.

I tend to agree with this point of view. There might be an idyllic version of the truth (as alluded to in Plato’s Cave), but it is something to strive for, never to reach. Knowing there are multiple facets of the truth that we cannot hope to unify, and deriving insights from them anyway, moves the exercise from the academic into the realm of the pragmatic. But to work with these facets, we still need to determine, for each one, where the master data originates so that it can be aggregated into that facet of the truth. So, for this reason alone, identifying the master data sources of a solution or an enterprise capability should be part of any exercise on how to approach Enterprise Information Management (EIM).

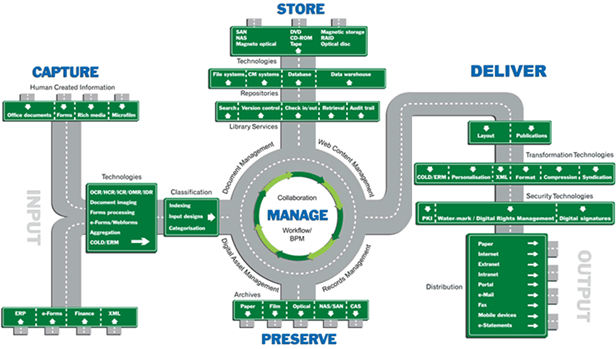

So do your due diligence, and decide for each stream of data pertaining to relevant business entities (or even the trivial ones) the following items (neatly packaged in the accompanying illustration):

1. Capture all inputs needed, and how they enter past the boundaries of your organization

2. Manage all these flows into useful information

3. Store all relevant information and determine the appropriate infrastructure to support these repositories

4. Have a system for preservation: archiving, purging, and the like so the information remains useful as long as your organization might need it

5. Make sure you can deliver the needed information to those stakeholders who need it and when they need it
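One way to keep track of these decisions per data stream is a small record with one slot per item. The sketch below is only an illustration: the `StreamPlan` class, its field names and the sample “customer” values are hypothetical, and the `master_source` field is my own addition to tie the checklist back to the master data question raised earlier.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class StreamPlan:
    """Decisions for one data stream; None means the decision is still open."""

    entity: str                          # business entity the stream describes
    master_source: Optional[str] = None  # where the master data originates
    capture: Optional[str] = None        # 1. how inputs enter the organization
    manage: Optional[str] = None         # 2. how flows become useful information
    store: Optional[str] = None          # 3. repository and supporting infrastructure
    preserve: Optional[str] = None       # 4. archiving and purging policy
    deliver: Optional[str] = None        # 5. who receives the information, and when

    def open_items(self) -> List[str]:
        """Return the names of all decisions that have not been made yet."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]


# Hypothetical example: a partially completed plan for customer data.
customer = StreamPlan(
    entity="customer",
    master_source="CRM",
    capture="web form and call centre",
    store="relational database",
)

print(customer.open_items())  # ['manage', 'preserve', 'deliver']
```

Listing the open items per stream makes it easy to see where the due diligence is still incomplete.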
