Keynotes bpmNEXT 2019

1st of February 2020

I skipped the review of bpmNEXT 2018 for lack of time, but the videos of bpmNEXT 2019 got me picking up my pen again. Just as with the 2017 edition, I will structure what I learned over the course of the various keynotes by the topics summed up in Nathaniel Palmer’s opening talk. I like how he opened his annual “What will the next five years of Business Process Management bring us?” talk with a quick check of the predictions he made during that same opening keynote in 2015: a report card of sorts on how his views of the future stack up against reality. As a reminder, he coined the term “intelligent automation” as the elevator pitch for processes becoming adaptive, data-driven and goal-oriented. Looking at the current state of affairs, he went three for three on this prediction.

His predictions over the following years introduced the three R’s (Rules, Relationships and Robots) as categories of ongoing developments in the field. And we can safely say that with RPA and AI, the robots are here! They are driven by processes and actively participate in them. Over the last two years they have gone from fringe instances to mainstream, and they have even made their way into end users’ homes, where names such as Siri or Alexa are frequently called out to provide information or perform tasks. They are gathering under the banner of “conversational interaction interfaces”.

The 2019 predictions of Nathaniel Palmer are the following:

- By 2022, robots and the digital workforce will account for 50% of all commercial transactions and production work.

- By 2022, 70% of commercial transactions will occur on third-party, cloud-based systems outside of the direct control of the organization.

- By 2022, 80% of all user interactions will be on devices and interfaces other than smartphones and laptops.

Other parties predict the same outcomes, but with more conservative numbers. Gartner expects 15% of all customer interactions to be conversational by 2021, which would still be a 400% increase compared to 2017. The importance of this new form of customer interaction cannot be overstated, though. Gallup indicates that customer retention accounts for 23% of profitability and revenue for companies, and a 2% increase in customer retention has the same effect on the bottom line as a 10% cut in operational costs. However, more and more customers do not want to be bothered with interaction-intensive communication such as loyalty programs or social media interactions. Speed and convenience are winning over price and brand, and conversational interfaces remove much of the friction customers experience in present-day interactions.

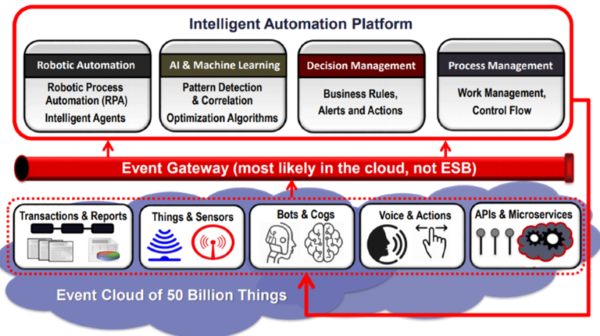

Nathaniel Palmer’s three categories, coupled with his predictions, strongly influence his proposed schematic for a complete process automation platform, as seen below. But how do we depict these new trends when they pop up in our processes? The triad of BPMN + CMMN + DMN should suffice, even if the last two could still use some maturing, both in syntax and in their support by existing tools. But these relationships are being warped by intelligent automation, which throws us curve balls when it comes to how processes should and do execute.

Nathaniel Palmer's View on Modern BPMS Framework (2019)

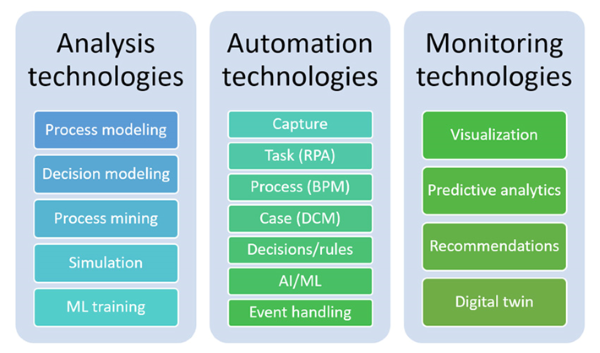

In a similar fashion, in a recent blog post for Trisotech titled “The Case for a Business Automation Center of Excellence”, Sandy Kemsley proposes the following capabilities that should be available for any BPM effort within an enterprise. These capabilities should be developed, maintained and improved by a dedicated group, commonly referred to as a Center of Excellence (CoE). Such a CoE is also one of the capabilities Roger Tregear proposes to develop in his book “Reimagining Management”.

R is for Relationships

The main idea behind the Relationships category is that process automation is evolving from being heavy on the integration side of the process equation toward processes that know where and how to get the data, information and context they need for their execution. As Nathaniel Palmer put it (paraphrased): the bread and butter of a process is still sequences and decision points, but intelligent automation throws a wrench into that, as it gives you processes that are prescribed by rules, whose execution is affected by events, and whose execution path is determined by context.

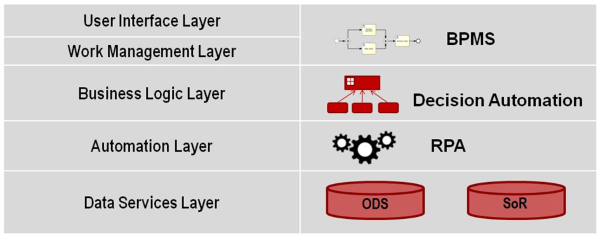

Intelligent automation can be seen as the next step in process automation, where business rules and conventional process management components are augmented with software robots and artificial intelligence. The BPMS no longer merely supports interactions with humans; it also alleviates their workload by delegating tedious tasks to these robots. The synergies between these tried-and-true practices and the “brains” of AI can be seen in the proposed layers for the next-generation BPMS.

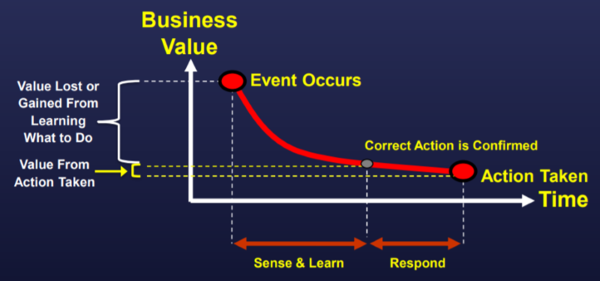

Intelligent automation requires rethinking how we approach tasks, no longer making a distinction between the actual task and what supports it. It also makes us think about who needs to perform that task. The main challenge will be to reduce the time it takes an organization to turn Black Swan events, which disrupt its processes in unexpected ways, into actionable events that can boost the overall efficiency and effectiveness of those processes. This in turn enhances the value derived from the resulting actions, and the learning derived from such events is what will help in correctly navigating similar events in the future.

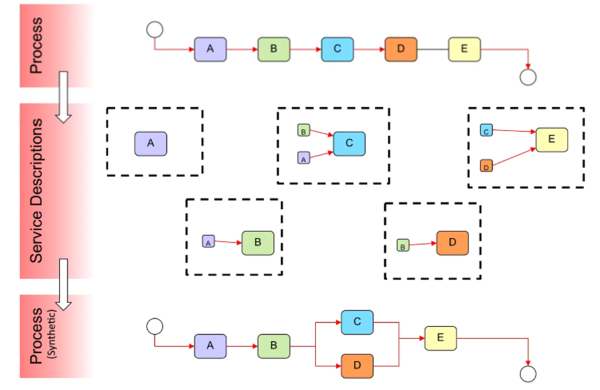

Keith Swenson’s keynote was all about the Emergent Synthetic Process. The main premise is that a process designed together with all involved stakeholders usually ends up somewhat cluttered. And since it came about as a consensus between a sizeable set of stakeholders, it becomes very hard to change or improve the process afterwards. The synthetic process comes from the practice of splitting up such processes into singular tasks (what he calls service descriptions), characterized only by their required preconditions. Then, at the start of a process instance, a context-based “synthetic” process definition is determined from these preconditions, just for this instance. This aligns nicely with Nathaniel’s vision of context-based process execution, where the executors show a GPS-like behavior, routing each process instance along the most pertinent path (whether for speed of execution or some other stipulated factor).

Although the analysis of a process in this fashion is a bit more complicated and counter-intuitive, there are several benefits in approaching processes this way:

- Service descriptions can be changed at any time without needing a consensus of all stakeholders, so long as the existing preconditions don’t change.

- Since service descriptions are an explicit description of their requirements, the synthetic process always meets the requirements of all stakeholders.

- Synthetic processes are optimized for each context and individual process execution.
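The keynote, as summarized here, does not prescribe an algorithm, so the following is only a minimal sketch of how a synthetic process could be derived per instance. It assumes that each service description also declares the facts it produces (an assumption; only preconditions are mentioned above) and that simple forward chaining is good enough. `ServiceDescription`, `synthesize` and the loan-application services are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceDescription:
    """One task, described by what it needs and (assumed) what it yields."""
    name: str
    preconditions: frozenset[str]
    produces: frozenset[str]  # assumption: not part of the talk as summarized


def synthesize(services: list[ServiceDescription], context: set[str], goal: set[str]) -> list[str]:
    """Build a per-instance ("synthetic") process by forward chaining.

    Starting from the facts already known for this instance, repeatedly pick a
    service whose preconditions are all met and that still adds new facts, until
    every goal fact is known. Returns the service names in execution order.
    """
    known = set(context)
    plan: list[str] = []
    while not goal <= known:
        ready = [s for s in services
                 if s.preconditions <= known and not s.produces <= known]
        if not ready:
            raise ValueError(f"goal unreachable, missing: {sorted(goal - known)}")
        step = ready[0]  # simplest policy: first ready service in declaration order
        plan.append(step.name)
        known |= step.produces
    return plan


# Hypothetical loan-application services.
services = [
    ServiceDescription("credit_check", frozenset({"application"}), frozenset({"credit_score"})),
    ServiceDescription("verify_identity", frozenset({"application"}), frozenset({"identity_verified"})),
    ServiceDescription("decide", frozenset({"credit_score", "identity_verified"}), frozenset({"decision"})),
]

print(synthesize(services, {"application"}, {"decision"}))
# ['credit_check', 'verify_identity', 'decide']
print(synthesize(services, {"application", "identity_verified"}, {"decision"}))
# ['credit_check', 'decide']
```

The second call shows the context effect: an instance whose identity is already verified gets a shorter process without anyone redrawing a diagram. A real planner would choose among ready services by cost or speed rather than by declaration order.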

Jim Sinur, however, tells us not to discount the importance of technology combinations that can really deliver business and customer value. Used separately, technology markets create silos in which their suppliers play a zero-sum game. He details the need to move to an ultimate Digital Business Platform. To find the best-of-breed hybrid of technologies that achieves such a platform, the following capabilities should be considered:

- Artificial Intelligence/Decision Automation/Machine Learning

- Analytics/Data Mining/Data Architecture

- BPM/Process Development/Automation

- Collaboration/Content Management

- Business Applications/Models (e.g. Customer Journey Mapping)/Components

- Cloud/Integration

- Internet-of-Things/Edge Hardware

When leveraging these collaboration trends, the best approach is to start with short term benefit combinations all the while aiming for this ultimate platform. This will allow you to balance customer experience with the needed degree of operational excellence.

R is for Robots

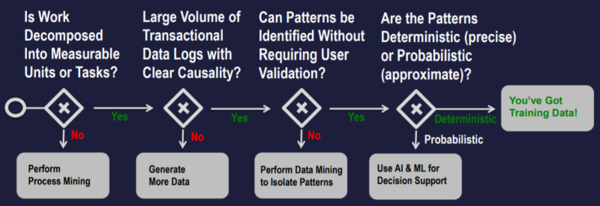

Robots and Artificial Intelligences can be leveraged to achieve great gains in process automation, but they are only as good as the training data they are given. Machine Learning requires large, clean datasets that are predictive in nature in order to yield high-confidence predictions. 40% of all RPA initiatives fail because the wrong processes were chosen. Nathaniel Palmer shows us a simple sequence flow to determine where such proper data can be found.

This sequence segues into Nathaniel Palmer’s 7 Habits of Intelligent Automation:

- Robots Require Rules: BPM and Decision Management should drive RPA

- Focus on how to access and act on external data

- Separate what supports a task from the definition of the task

- Design processes around outcomes and not around performers

- Adaptive processes are driven by context: Events, Rules, and Patterns

- Create a learning loop to recognize and determine the best course of action

- AI and Machine Learning require well-understood training data

Malcolm Ross states it plainly, putting today’s buzzwords in a pragmatic light: BPM unites RPA robots with people, streamlining their collaboration, and AI optimizes the process outcomes. There is a caveat, however: as automated tasks are processed faster through RPA, the resulting load of human tasks might become a new bottleneck for the process. Process mining techniques can be applied to detect the proper business rules to steer the process into a structure that prevents these new bottlenecks. Alternatively, processes can be cut up into micro-processes that are linked to each other using cases (CMMN) that represent the stages the end-to-end process goes through, with sentinels guarding the progression. This is what the people at Flowable call the “intentional process”.
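To make “sentinels guarding the progression” more concrete, here is a minimal sketch under loud assumptions: the stages are strictly ordered, and each sentinel is reduced to a simple condition on the case file (real CMMN sentinels also have event-based parts). `Stage`, `entered_stages` and the claim-handling stages are hypothetical and are not Flowable’s API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Stage:
    """One micro-process of the end-to-end case (hypothetical, loosely CMMN-inspired)."""
    name: str
    # Entry sentinel, simplified to a condition on the case file that must hold
    # before this stage may start.
    entry_sentinel: Callable[[dict], bool]


def entered_stages(stages: list[Stage], case_file: dict) -> list[str]:
    """Return the stages the case has progressed into, in order.

    Progression stops at the first stage whose entry sentinel is not met, so a
    later stage is never entered while an earlier one is still blocked.
    """
    entered: list[str] = []
    for stage in stages:
        if not stage.entry_sentinel(case_file):
            break
        entered.append(stage.name)
    return entered


# Hypothetical claim-handling case with three linked micro-processes.
stages = [
    Stage("intake", lambda c: "claim_form" in c),
    Stage("assessment", lambda c: c.get("documents_complete", False)),
    Stage("settlement", lambda c: c.get("assessment_done", False)),
]

print(entered_stages(stages, {"claim_form": True, "documents_complete": True}))
# ['intake', 'assessment']
```

Each stage can be implemented as its own small process, while the case only decides, through the sentinels, when the next one may begin.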

R is for Rules

There are lessons to be learned from Lloyd Dugan’s experiences in the RPA business. When designing processes where RPA plays a part, decision logic can be used to determine when to assign an RPA worker to a task and when to let a human worker handle it. This can be based on the complexity of the work, the expertise needed and the accountability for the performed task. I would add one more parameter: the sensitivity of the subject. This determination of RPA task suitability is made using the Event-Condition-Action (ECA) paradigm. To translate this into a BPMN design, the RPA actor is modeled as a separate role (swim lane), which visualizes which tasks could be handled by the RPA robot. This is important to combat “Lost in Translation” symptoms, where tasks are neglected for a long time by human workers who assume the RPA robot will handle them.
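A minimal sketch of such an ECA routing rule, using the four criteria named above (complexity, expertise, accountability and sensitivity). The `Task` fields, the 1-to-5 complexity scale and the `max_rpa_complexity` threshold are hypothetical; a real implementation would more likely live in a DMN decision table than in code.

```python
from dataclasses import dataclass
from enum import Enum


class Lane(Enum):
    """Swim lanes in the BPMN design."""
    RPA = "rpa_robot"
    HUMAN = "human_worker"


@dataclass(frozen=True)
class Task:
    name: str
    complexity: int        # hypothetical scale: 1 (trivial) to 5 (very complex)
    needs_expertise: bool  # requires specialist knowledge
    accountable: bool      # a person has to answer for the outcome
    sensitive: bool        # sensitive subject matter


def on_task_created(task: Task, max_rpa_complexity: int = 2) -> Lane:
    """ECA rule. Event: a task is created. Condition: suitability checks. Action: assign a lane."""
    # Condition: any one of these criteria makes the task unsuitable for the robot.
    unsuitable = (
        task.complexity > max_rpa_complexity
        or task.needs_expertise
        or task.accountable
        or task.sensitive
    )
    # Action: route the task to the matching swim lane.
    return Lane.HUMAN if unsuitable else Lane.RPA


print(on_task_created(Task("copy invoice data", 1, False, False, False)).name)  # RPA
print(on_task_created(Task("answer a complaint", 2, False, False, True)).name)  # HUMAN
```

Because the rule returns an explicit lane, every task visibly belongs to either the robot or a person, which is exactly what guards against the “Lost in Translation” symptom.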

Another consideration is that RPA is very similar in spirit to Straight-Through Processing (STP), and should honor the best practices of that discipline. In other words, the automation design should account for a graceful stop or soft fail when the RPA robot can no longer finish its process, for whatever reason. Ideally, this should happen without relying too much on rollback and compensation actions.
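One way to read “graceful stop without relying on rollback” is sketched below: the robot keeps the steps it already completed, marks the work item for a human and records where it stopped. This is a minimal illustration under that interpretation; `WorkItem`, `run_robot` and the invoice steps are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class WorkItem:
    item_id: str
    completed_steps: list[str] = field(default_factory=list)
    status: str = "in_progress"
    handover_note: str = ""


def run_robot(item: WorkItem, steps: list[tuple[str, Callable[[WorkItem], None]]]) -> WorkItem:
    """Run the robot's steps in order; on failure, stop gracefully and hand over to a human.

    Steps that already succeeded are kept as they are (no rollback or
    compensation); the note records where the robot stopped and why.
    """
    for name, action in steps:
        try:
            action(item)
        except Exception as exc:  # any failure means the robot cannot finish: soft fail
            item.status = "needs_human"
            item.handover_note = f"stopped at {name}: {exc}"
            return item
        item.completed_steps.append(name)
    item.status = "done"
    return item


# Hypothetical robot steps; the second one fails, e.g. because a screen changed.
def read_invoice(item: WorkItem) -> None:
    pass


def post_to_ledger(item: WorkItem) -> None:
    raise TimeoutError("ledger screen did not respond")


result = run_robot(WorkItem("INV-1"), [("read_invoice", read_invoice), ("post_to_ledger", post_to_ledger)])
print(result.status, result.completed_steps, result.handover_note)
# needs_human ['read_invoice'] stopped at post_to_ledger: ledger screen did not respond
```

The human picks up the item at the step that failed instead of redoing or undoing the robot’s earlier work.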

R is for Remote

This is not one of the R’s Nathaniel Palmer introduced, but a lot of information could also be gathered on how to go about linking the Serverless approach to BPM. This could be construed as part of the Relationships topic, or even as a synergy or technology combination (to use Jim Sinur’s terms), but I wanted to make it a separate block, since there is so much brewing in this technology sphere. Definitions are important, so here’s a definition of Serverless as stated by Forrester:

BonitaSoft’s keynote specified that to properly link Serverless with your BPM approach, four principles should be addressed in order to maximize the pros of serverless architectures (no squandered idle resources, linear cost, isolation and parallelism of services) over the cons (greater complexity, asynchronous nature of service calls):

- Should be transparent to end users: Scaling and remote calls to Functions-as-a-Service should not be noticeable to the users interacting with the automated business process.

- Should be automatic and dynamic: Administrators should need next to no interaction with the scaling platform, keeping it as smooth as possible.

- Should be under cost control: As the remote platform charges according to usage, one should be aware of budget and consumption boundaries while these remote services are being used.

- Should be generic to all process types: Here I would argue that leveraging remote services should be no different than the standard service task calling a local service.
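Cost control and genericity can be combined in a single call path, sketched below under stated assumptions: a flat price per invocation (real FaaS pricing usually depends on duration and memory) and a fallback to a local implementation once the budget is spent (one possible policy among several, such as queueing or throttling). `BudgetGuard` and `call_service` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class BudgetGuard:
    """Tracks spend on a pay-per-use platform against a fixed budget (hypothetical)."""
    budget: float
    cost_per_call: float  # simplifying assumption: flat price per invocation
    spent: float = 0.0

    def allow(self) -> bool:
        """Record the cost and return True if one more call still fits the budget."""
        if self.spent + self.cost_per_call > self.budget:
            return False
        self.spent += self.cost_per_call
        return True


def call_service(guard: BudgetGuard,
                 remote: Callable[[Any], Any],
                 local: Callable[[Any], Any],
                 payload: Any) -> Any:
    """One call signature for every service task; remote while the budget lasts, else local."""
    return remote(payload) if guard.allow() else local(payload)


guard = BudgetGuard(budget=1.0, cost_per_call=0.25)
results = [call_service(guard, lambda p: f"remote:{p}", lambda p: f"local:{p}", i) for i in range(5)]
print(results)
# ['remote:0', 'remote:1', 'remote:2', 'remote:3', 'local:4']
```

The process model sees the same service task either way, which keeps the remote platform transparent to end users and generic across process types.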

Sandy Kemsley starts from a straightforward statement: as the years go on, technology keeps alternating between best-of-breed solutions and monolithic development. An approach is needed to deal with this swinging of the pendulum, in order to alleviate the troubles that arose from monolithic systems in the past and the tendency of modern-day development to try to work around this legacy code. SOA and N-Tier architectures tried to fix the same pain in the past but ended up creating monolithic, highly interdependent layers of their own, often with even more potential for destabilizing the IT landscape. In much the same way, a BPMS is a central component that presupposes process orchestration at the top level of the application landscape. The goal is obvious: business agility requires technical agility, which is difficult to achieve with such architectures.

If we want to be able to change components more easily, we need to tackle the issues that arise from typical monolithic development:

- There is no single owner (of process or application).

- There is no development team with an overall code view.

- Any upgrade requires large-scale redeployments.

In essence, we need to create components that represent discrete, self-contained business capabilities. These should be loosely coupled, and independently developed and deployed. Businesses should aim to create and use best-of-breed microservices (some built in-house, others sourced from third parties), and they should always be able to swap them out should something better come along.
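The swap-ability argument boils down to the process depending on a contract rather than on a concrete component. A minimal sketch, assuming a hypothetical address-validation capability; `AddressValidator`, `InHouseValidator`, `VendorValidator` and `Onboarding` are illustrative names only, and the vendor class is a local stand-in for what would really be a remote call.

```python
from typing import Protocol


class AddressValidator(Protocol):
    """Contract for one discrete business capability (hypothetical example)."""

    def validate(self, address: str) -> bool: ...


class InHouseValidator:
    """Built in-house: only checks that a house number is present."""

    def validate(self, address: str) -> bool:
        return any(ch.isdigit() for ch in address)


class VendorValidator:
    """Stand-in for a third-party service; a real one would make a remote call."""

    def validate(self, address: str) -> bool:
        return len(address.split()) >= 3


class Onboarding:
    """The process depends only on the contract, never on a concrete implementation."""

    def __init__(self, validator: AddressValidator) -> None:
        self.validator = validator

    def register(self, address: str) -> str:
        return "accepted" if self.validator.validate(address) else "rejected"


onboarding = Onboarding(InHouseValidator())
print(onboarding.register("Main Street 12"))  # accepted
onboarding.validator = VendorValidator()      # swap the component, process unchanged
print(onboarding.register("Main Street"))     # rejected
```

In a real landscape the contract would be an API specification rather than a Python protocol, but the principle is the same: replacing the component does not require touching or redeploying the process.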

R is for codeR

A term that still seems to roam the discipline is that of the Citizen Developer. The low-code or even no-code promise seems to be hampered by four observations, much like the myths of BPM that Sandy Kemsley coined a few years back:

- The processes are too complex to be “programmed” by the Citizen Developer.

- The Citizen Developer doesn’t get the nuances of BPM.

- The enabling platform would be too scaled down in functionality to support the Citizen Developer’s activities.

- It only works for small organizations, not for enterprises.

Neill Miller from KissFlow gave a demo of their product supporting the activities of the Citizen Developer, but I wasn’t entirely convinced he managed to disprove these four points.

Conclusion

In all, this edition was very interesting, with a lot of new concepts and paradigms seeing the light. The “synthetic process”, the “intentional process”, applications of deep/machine learning and how to react to black swan events: all of these captured my imagination and reinforced my belief that BPM is here to stay for at least a few more years.

Tags: Review, BPM, SOA